Database design is a critical aspect of any software development project. Whether you are building a small application or a complex enterprise system, the quality of your database design can greatly impact the performance, scalability, and maintainability of your application.

So, what exactly is good database design? In simple terms, it is the practice of creating a well-structured, efficient database that meets the requirements of your application. A good design protects data integrity, minimizes redundancy, and makes data easy to retrieve and manipulate.

Good database design ensures that data is accurate, consistent, and intact, and that the database is efficient, reliable, and easy to use. The design must let data be stored and retrieved quickly while remaining stable under large data volumes. An experienced database designer can create a robust, scalable, and secure database architecture that meets the needs of modern data systems.

Architecture and Design

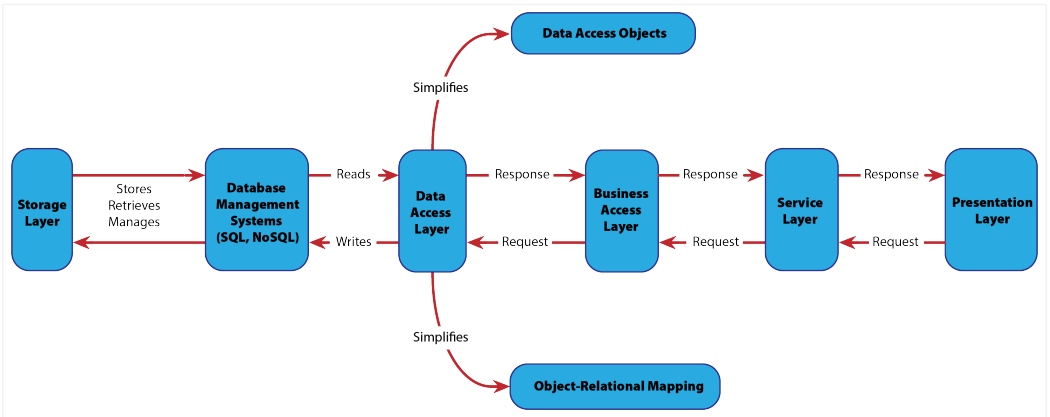

A modern data architecture for microservices and cloud-native applications involves multiple layers, each with its own components and preferred technologies. The foundational layer is typically a storage layer made up of one or more databases, whether relational (SQL), NoSQL, or NewSQL. This layer is responsible for storing, retrieving, and managing data, including indexing, querying, and transaction management.

To improve this architecture, it helps to add a data access layer that sits above the storage layer and below the service layer, which holds the application's business logic. The data access layer uses techniques such as object-relational mapping (ORM) or data access objects (DAOs) to simplify data retrieval and manipulation. At the top sits the presentation layer, which presents information to the end user. Moving data efficiently through these layers, so that it reaches users as meaningful information, is central to a modern data architecture.
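As a rough illustration of that layering, the sketch below uses Python's built-in `sqlite3` module as a stand-in for the storage layer. The `Customer`, `CustomerDAO`, and `CustomerService` names are hypothetical; the point is only that SQL lives in the DAO and business rules live in the service, so neither leaks into the other.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:
    """Domain object handed from the data access layer to the service layer."""
    id: int
    name: str
    email: str


class CustomerDAO:
    """Data access object: the only class that issues SQL for customers."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def create_table(self) -> None:
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS customers ("
            " id INTEGER PRIMARY KEY,"
            " name TEXT NOT NULL,"
            " email TEXT NOT NULL UNIQUE)"
        )

    def add(self, name: str, email: str) -> Customer:
        # Parameterized query; callers never see the SQL.
        cur = self.conn.execute(
            "INSERT INTO customers (name, email) VALUES (?, ?)", (name, email)
        )
        self.conn.commit()
        return Customer(cur.lastrowid, name, email)

    def find_by_email(self, email: str) -> Optional[Customer]:
        row = self.conn.execute(
            "SELECT id, name, email FROM customers WHERE email = ?", (email,)
        ).fetchone()
        return Customer(*row) if row else None


class CustomerService:
    """Service layer: business rules only, no SQL."""

    def __init__(self, dao: CustomerDAO) -> None:
        self.dao = dao

    def register(self, name: str, email: str) -> Customer:
        # Business rule: emails are case-insensitive and registered only once.
        normalized = email.strip().lower()
        existing = self.dao.find_by_email(normalized)
        if existing is not None:
            return existing
        return self.dao.add(name, normalized)


conn = sqlite3.connect(":memory:")
dao = CustomerDAO(conn)
dao.create_table()
service = CustomerService(dao)
alice = service.register("Alice", " Alice@Example.com ")
```

Swapping SQLite for another database would, in principle, only touch `CustomerDAO`; an ORM would replace the hand-written SQL but keep the same separation.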

The goal is to design a scalable database that can handle high volumes of traffic and data while minimizing downtime and performance problems. By following best practices and addressing the challenges described below, we can meet the needs of modern data architectures across different applications.

Key Principles of Good Database Design

There are several key principles that guide good database design:

- Normalization: Normalization is the process of organizing data in a database to reduce redundancy and undesirable dependencies between attributes. It involves breaking large tables into smaller, more manageable ones and defining relationships between them.

- Data Integrity: Data integrity refers to the accuracy, consistency, and reliability of data stored in a database. A good database design includes constraints, such as primary keys, foreign keys, and unique constraints, to ensure data integrity.

- Efficiency: A well-designed database should be efficient in terms of storage and performance. This includes optimizing data types, indexing frequently accessed columns, and minimizing data duplication.

- Scalability: Good database design makes the database straightforward to scale, so it can handle growing amounts of data and users without sacrificing performance. This involves proper indexing, partitioning, and the use of caching techniques.

- Maintainability: A good database design is easy to maintain and modify. It should be modular, with clear separation of concerns, and adhere to industry best practices and standards.
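To make normalization and integrity constraints concrete, here is a minimal sketch of a hypothetical two-table schema in SQLite. Customer details are stored once and referenced from orders by key, and the primary key, `UNIQUE`, and foreign key constraints let the database itself reject bad rows. One SQLite-specific assumption: it enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

# Hypothetical schema. Customer details are stored once; orders point at
# them by key instead of repeating name and email on every order row.
SCHEMA = """
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,          -- primary key: unique row identity
    name  TEXT NOT NULL,
    email TEXT NOT NULL UNIQUE          -- unique constraint: no duplicate emails
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL
                REFERENCES customers (id),  -- foreign key to customers
    total_cents INTEGER NOT NULL
);
"""


def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    return conn


def accepted(conn: sqlite3.Connection, sql: str, params: tuple) -> bool:
    """Run one insert; return False if a constraint rejected it."""
    try:
        with conn:  # commit on success, roll back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False


conn = connect()
add_customer = "INSERT INTO customers (id, name, email) VALUES (?, ?, ?)"
add_order = "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)"
print(accepted(conn, add_customer, (1, "Alice", "alice@example.com")))  # True
print(accepted(conn, add_customer, (2, "Al", "alice@example.com")))     # False: duplicate email
print(accepted(conn, add_order, (1, 500)))                              # True
print(accepted(conn, add_order, (99, 500)))                             # False: no customer 99
```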

Considerations

Taking the following considerations into account when designing a database for enterprise use makes it possible to build a robust, efficient system that meets the organization's specific needs while ensuring data integrity, availability, security, and scalability.

One important consideration is the data itself: its format, size, and complexity, and the relationships between data entities. Different types of data may require specific storage structures and data models. For instance, transactional data often fits well with a relational model, while unstructured data such as images or videos may call for a NoSQL database or a dedicated object store.

How often data is read plays a significant role in shaping the design. In read-heavy systems, caching frequently accessed data can improve query response times. Data warehouse workloads, by contrast, typically run less frequent but larger analytical queries. In either case, techniques such as indexing, caching, and partitioning can be used to optimize query performance.
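A common way to implement this kind of caching is the cache-aside pattern: the application checks the cache, falls back to the database on a miss, and then fills the cache. The sketch below is an in-process illustration only, with hypothetical names (`TTLCache`, `get_product_name`, a `products` table); production systems usually use a shared cache such as Redis or Memcached, which this code does not model.

```python
import sqlite3
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}
        self.misses = 0

    def get(self, key: Hashable) -> Tuple[bool, Any]:
        entry = self._entries.get(key)
        if entry is not None and self.clock() - entry[0] < self.ttl:
            return True, entry[1]  # fresh entry: no database round trip
        self.misses += 1
        return False, None

    def set(self, key: Hashable, value: Any) -> None:
        self._entries[key] = (self.clock(), value)

    def invalidate(self, key: Hashable) -> None:
        # Call after a write so later reads go back to the database.
        self._entries.pop(key, None)


def get_product_name(conn: sqlite3.Connection, cache: TTLCache,
                     product_id: int) -> Optional[str]:
    """Cache-aside read: try the cache, fall back to the database, then fill the cache."""
    found, value = cache.get(product_id)
    if found:
        return value
    row = conn.execute("SELECT name FROM products WHERE id = ?",
                       (product_id,)).fetchone()
    value = row[0] if row else None
    cache.set(product_id, value)
    return value
```

The time-to-live bounds how stale a cached value can get; explicit invalidation after writes tightens that further.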

Ensuring the availability of the database is crucial for keeping the application online and responsive. Techniques such as replication, load balancing, and failover are commonly used to achieve high availability, and a robust disaster recovery plan adds an extra layer of protection to the overall database system.
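Client-side failover can be sketched in a few lines. The helper below, `connect_with_failover`, is hypothetical: it tries a primary and then its replicas in priority order and returns the first connection that opens. Real drivers and proxies often provide this built in (PostgreSQL's libpq, for example, accepts multiple hosts in one connection string), and production code would also need timeouts and health checks.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def connect_with_failover(connectors: Sequence[Callable[[], T]]) -> T:
    """Return the first connection that opens successfully.

    `connectors` is ordered by priority: primary first, then replicas.
    Each is a zero-argument callable that either returns a connection
    or raises ConnectionError (a convention assumed for this sketch).
    """
    failures: List[str] = []
    for connect in connectors:
        try:
            return connect()
        except ConnectionError as exc:
            failures.append(str(exc))  # record the reason, then try the next endpoint
    raise ConnectionError("all endpoints failed: " + "; ".join(failures))


# Stand-in endpoints: the primary is down, the replica answers.
def primary() -> str:
    raise ConnectionError("primary unreachable")


def replica() -> str:
    return "connection to replica-1"


conn = connect_with_failover([primary, replica])
```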

As data volumes grow, it is essential that the database system can handle increased loads without compromising performance. Employing techniques like partitioning, sharding, and clustering allows for effective scalability within a database system. These approaches enable the efficient distribution of data and workload across multiple servers or nodes.
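Sharding needs a rule that maps each record to a node. A minimal sketch of hash-based sharding follows; `shard_for` and `partition` are illustrative names, and the shard count is an assumption.

```python
import hashlib
from typing import Dict, List, Sequence


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a stable hash.

    hashlib is used instead of the built-in hash(), because hash() of a str is
    randomized per process and would route the same key differently after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(keys: Sequence[str], num_shards: int) -> Dict[int, List[str]]:
    """Group keys by the shard (server or node) that would store them."""
    shards: Dict[int, List[str]] = {i: [] for i in range(num_shards)}
    for key in keys:
        shards[shard_for(key, num_shards)].append(key)
    return shards


layout = partition([f"customer:{n}" for n in range(1, 9)], num_shards=3)
for shard, keys in layout.items():
    print(shard, keys)
```

Plain modulo hashing is the simplest choice, but changing `num_shards` remaps most keys; consistent hashing or a lookup directory is commonly used when shards are added or removed often.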

Data security is a critical consideration in modern database design, given the rising prevalence of fraud and data breaches. Implementing robust access controls, encryption mechanisms for sensitive personally identifiable information, and conducting regular audits are vital for enhancing the security of a database system.
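Regular audits are easier when the database records sensitive changes itself. One way to do that, sketched here in SQLite with a hypothetical `accounts` table, is a trigger that writes to an audit table whenever an email address changes. Access control and encryption are not shown, since the standard SQLite build has neither user accounts nor encryption.

```python
import sqlite3

# Hypothetical schema: accounts holds PII; audit_log is written by a trigger,
# so application code cannot forget to record a change.
SCHEMA = """
CREATE TABLE accounts (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL
);
CREATE TABLE audit_log (
    id         INTEGER PRIMARY KEY,
    account_id INTEGER NOT NULL,
    action     TEXT NOT NULL,
    changed_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TRIGGER audit_email_change
AFTER UPDATE OF email ON accounts
BEGIN
    INSERT INTO audit_log (account_id, action) VALUES (OLD.id, 'email_changed');
END;
"""


def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


conn = setup()
with conn:
    conn.execute("INSERT INTO accounts (id, email) VALUES (1, 'a@example.com')")
    conn.execute("UPDATE accounts SET email = 'b@example.com' WHERE id = 1")
print(conn.execute("SELECT account_id, action FROM audit_log").fetchall())
# [(1, 'email_changed')]
```

The trigger deliberately logs which account changed and when, not the old or new email, so the audit table does not become another copy of the PII.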

In transaction-heavy systems, keeping transactional data consistent is paramount. Most databases provide locking mechanisms and configurable transaction isolation levels to preserve data integrity and consistency. These features help prevent anomalies, such as lost updates or dirty reads, that arise when multiple transactions modify the same data concurrently.
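The sketch below shows a transaction keeping two related updates consistent, using SQLite and a hypothetical `accounts` table with integer balances. Isolation levels are not configured here: SQLite allows only one writer at a time, whereas server databases such as PostgreSQL let you choose levels like `SERIALIZABLE`.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> None:
    """Move `amount` from account src to account dst as one atomic unit."""
    with conn:  # one transaction: commit if the block succeeds, roll back if it raises
        # The balance check is part of the UPDATE, so no other write can slip in
        # between reading the balance and changing it.
        debited = conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ? AND balance >= ?",
            (amount, src, amount),
        ).rowcount
        if debited != 1:
            raise ValueError("insufficient funds or unknown source account")
        credited = conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?",
            (amount, dst),
        ).rowcount
        if credited != 1:
            # Raising here rolls back the debit above as well.
            raise ValueError("unknown destination account")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 0)])
conn.commit()

transfer(conn, 1, 2, 30)
try:
    transfer(conn, 1, 99, 10)  # destination does not exist
except ValueError:
    pass
print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
# [(1, 70), (2, 30)]
```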

Challenges

Choosing the most suitable tool or technology for our database needs can be challenging because the database landscape grows and changes rapidly. With new types of databases appearing constantly, and with differences even among vendors offering the same type, it is crucial to plan carefully based on your specific use cases and requirements.

By thoroughly understanding our needs and researching the available options, we can identify the right tool with the appropriate features to meet our database needs effectively.

Polyglot persistence, the use of multiple SQL or NoSQL databases within one system, arises when an application's requirements cannot be met well by a single database. Selecting the right databases for transactional workloads, ensuring data consistency, handling financial data, and accommodating high data volumes all pose challenges. Each database must be chosen carefully to meet its specific requirements while maintaining the integrity of the overall system.

Integrating data from different upstream systems, each with its own structure and volume, presents a significant challenge. The goal is to achieve a single source of truth by harmonizing and integrating the data effectively. This requires comprehensive planning to ensure compatibility and to future-proof the integration solution against later changes and updates.
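One common way to approach this harmonization is to map every upstream record into one canonical shape at the edge of the system. The two feeds and their field names below are invented for illustration; only the pattern (one small mapper per source, one shared target type) is the point.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class CanonicalCustomer:
    """Single agreed-upon shape that all upstream feeds are mapped into."""
    source: str
    source_id: str
    email: str
    country: str


def from_crm(record: Dict[str, Any]) -> CanonicalCustomer:
    # Hypothetical CRM feed: {"CustomerID": 17, "EmailAddress": "...", "Country": "us"}
    return CanonicalCustomer(
        source="crm",
        source_id=str(record["CustomerID"]),
        email=record["EmailAddress"].strip().lower(),
        country=record["Country"].upper(),
    )


def from_billing(record: Dict[str, Any]) -> CanonicalCustomer:
    # Hypothetical billing feed: {"id": "B-9", "contact": {"email": "..."}, "cc": "US"}
    return CanonicalCustomer(
        source="billing",
        source_id=record["id"],
        email=record["contact"]["email"].strip().lower(),
        country=record["cc"].upper(),
    )


MAPPERS: Dict[str, Callable[[Dict[str, Any]], CanonicalCustomer]] = {
    "crm": from_crm,
    "billing": from_billing,
}


def harmonize(source: str, record: Dict[str, Any]) -> CanonicalCustomer:
    """Route a raw record to its source-specific mapper.

    Adding a new upstream system means adding one mapper, not changing consumers.
    """
    return MAPPERS[source](record)
```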

Performance is an ongoing concern in both applications and database systems, and every addition to the database system can affect it. To address performance issues, follow best practices when adding, managing, and purging data, and when indexing, partitioning, and applying encryption. These practices help you mitigate bottlenecks and optimize the overall performance of your database system.
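Whether an index actually helps can be checked rather than assumed. In SQLite, `EXPLAIN QUERY PLAN` reports whether a query scans the whole table or searches an index; the sketch below uses a hypothetical `orders` table to compare the plan before and after adding an index. The exact wording of the plan text varies between SQLite versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN output as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)  # last column is the readable detail


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total_cents INTEGER)"
)
lookup = "SELECT id, total_cents FROM orders WHERE customer_id = ?"

before = query_plan(conn, lookup, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, lookup, (42,))

print(before)  # reports a full-table SCAN of orders
print(after)   # reports a SEARCH using idx_orders_customer
```

Other databases expose the same idea through their own `EXPLAIN` commands, with different output formats.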

Considering these factors will contribute to making informed decisions and designing an efficient and effective database system for your specific requirements.

The Benefits of Good Database Design

Investing time and effort into good database design can bring several benefits:

- Improved Performance: A well-designed database can significantly improve application performance by reducing the time required for data retrieval and manipulation operations.

- Enhanced Data Integrity: Good database design ensures data accuracy and consistency, reducing the risk of data corruption and ensuring reliable information.

- Reduced Redundancy: By minimizing data redundancy, a good database design lowers storage requirements and reduces the chances of data inconsistencies.

- Easier Maintenance: A well-structured database is easier to maintain and modify, reducing the time and effort required for future updates or enhancements.

- Scalability: Good database design allows for easy scalability, ensuring that the application can handle increasing data volumes and user loads without performance degradation.

Conclusion

Good database design is essential for building robust and efficient software applications. It ensures data integrity, improves performance, reduces redundancy, and allows for easy maintenance and scalability. By following the key principles of normalization, data integrity, efficiency, scalability, and maintainability, you can create a database that meets the requirements of your application and sets the foundation for a successful software project.